Posted: Thursday 11th July 2019

Author: Claudia Cox

A criticism of examinations is that they are limited in their capacity to assess students authentically using scenarios they may realistically encounter in the field. In fact, Times Higher Education wrote recently on this particular subject, acknowledging that while there are a number of factors which "[make] it difficult for universities to unilaterally transform their assessment practices... It seems that everyone - staff, students and employers alike - would benefit from a fresh approach." (Anna Mckie, 2018)

Examinations certainly still have value in assessment, not least because they ensure the work submitted is completed by the student, but we're starting to see academics thinking up some really exciting, experimental (fresh!) approaches which take advantage of the digital format and reveal the potential for hybrid exams.

We've had two digital exams in our most recent exam period that made use of the whitelisting feature, which grants students access to specific external resources on the internet whilst the exam still takes place in a secure, locked-down environment. Previously we've seen this tool used to give all students access to read-only documents and reference material, such as lengthy case studies or formulae sheets, but now these resources are being applied at an individual level so that each student can actually interact with the items to demonstrate real knowledge assimilation and problem solving.

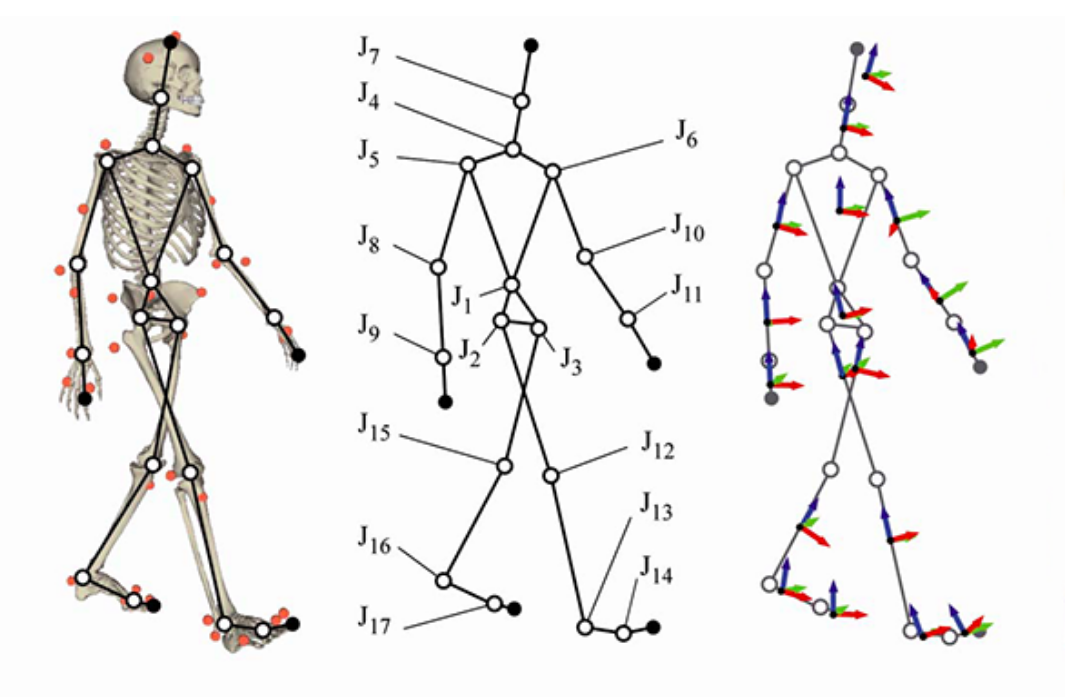

A Case Study in Sports Science (approx. 74 students)

The brief: A Biomechanics lecturer aimed to get students to analyse data on spreadsheets containing over 1000 rows of information, an impossible feat to replicate on paper. This task assessed their knowledge of data analysis methods and ability to display results.

How this was done: Multiple copies of the spreadsheets were hosted online, and a unique link was produced for each copy. This was initially tested by staff, then again with the students in an exam revision session. This last step was particularly useful; the students gave feedback which allowed the academic to modify his real exam questions and minimise ambiguity or uncertainty, e.g. "Can we also have the question instructions in the actual spreadsheet as well as the exam?" and "Can you highlight a specific area on the spreadsheet where we should put our answers and our working out?" Well in advance of the start date of the exam, the individual spreadsheets were allocated to each student in the exam software, using a naming convention to avoid any risk of giving two students the same copy.
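For anyone planning something similar, the allocation step could be sketched roughly as below. This is only an illustration, not the process or tooling we actually used: the function name `allocate_copies`, the `biomech-exam` prefix, the candidate IDs and the `example.invalid` URL are all hypothetical, and it assumes the copies are simply numbered in sequence, with a few spares created at the same time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    candidate_id: str
    copy_name: str
    link: str


def allocate_copies(candidate_ids, base_url, prefix="biomech-exam", spares=3):
    """Pair each candidate with one uniquely named spreadsheet copy.

    Returns (allocations, spare_copy_names). All names and URLs here are
    hypothetical; a real exam platform will have its own conventions.
    """
    # Refuse to continue if the candidate list itself contains duplicates.
    if len(set(candidate_ids)) != len(candidate_ids):
        raise ValueError("duplicate candidate IDs in the list")

    # Number every copy, including the spares, with zero-padded suffixes.
    total = len(candidate_ids) + spares
    width = max(3, len(str(total)))
    names = [f"{prefix}-{n:0{width}d}" for n in range(1, total + 1)]

    # zip() uses each name at most once, so no two candidates share a copy.
    allocations = [
        Allocation(cid, name, f"{base_url}/{name}")
        for cid, name in zip(sorted(candidate_ids), names)
    ]
    return allocations, names[len(candidate_ids):]


allocations, spare_names = allocate_copies(
    ["s1003", "s1001", "s1002"], "https://example.invalid/sheets", spares=2
)
```

Sorting the candidate IDs first keeps the mapping reproducible if the list is regenerated, and the leftover names are the spare copies held back for late additions or recovery.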

How did it go: Very well! The revision session served as useful preparation for students, and the number of queries about how to use the online spreadsheet during the exam was minimal (around 3 students). Their feedback, which allowed the academic to present the questions as clearly as possible, helped considerably.

Hurdles encountered: A student accidentally deleted an essential column of data on their spreadsheet and continued working without realising it. We had backup data and simply copied and pasted this information back in, resolving the issue in less than a second, but it highlights that being prepared and having backup plans and methods of recovery is essential.

What could have been better: We can improve the marking process in the future. As there was a mathematical answer, the question could have been set up so that students did their working out and analysis on the spreadsheet but entered a single answer into the exam (and this could have been automatically marked!).

A question type that allows file uploads could also have been used, so that the students' spreadsheets were still directly accessible within the exam system for marking. Still, this was a very encouraging first try.
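To give a sense of what automatic marking of a single numeric answer might involve, here is a minimal sketch. It is not a feature of our exam software: the function name `mark_numeric_answer` and the tolerance of 0.01 are assumptions chosen purely for illustration, and a real question would need its own agreed tolerance.

```python
import math


def mark_numeric_answer(submitted, expected, abs_tol=0.01):
    """Return True if the submitted text is a number close enough to expected.

    Hypothetical helper: abs_tol is an assumed marking tolerance.
    """
    try:
        value = float(submitted.strip())
    except ValueError:
        # Anything that does not parse as a number is marked incorrect.
        return False
    if not math.isfinite(value):
        # float() accepts "nan" and "inf"; neither is a valid answer.
        return False
    return math.isclose(value, expected, rel_tol=0.0, abs_tol=abs_tol)


print(mark_numeric_answer(" 12.50 ", 12.5))
print(mark_numeric_answer("12.52", 12.5))
print(mark_numeric_answer("twelve", 12.5))
```

Allowing a small tolerance means that students who round slightly differently in their spreadsheet are not penalised, while the working itself can still be reviewed by the marker if needed.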

A Case Study in Computer Science (approx. 68 students)

The brief: A lecturer in Digital Media and Games designed a charming diegetic examination which featured three levels of progressively harder multiple choice questions, followed by a "boss level" which asked students to produce a report or prototype document for a game based on a client brief. Students were given access to Excel, PowerPoint, Word, and code editors. They were also able to include drawings and diagrams.

How this was done: Again we used the individual whitelisting feature, this time to provide access to a variety of resources, and carried out testing prior to the exam. The students already had experience of a similar question format from class tests. In this exam we used the file upload question type, which allowed students to download offline versions of their work and then re-upload these into the locked-down exam environment for the marker to view.

How did it go: Great! Again, student queries about the use of resources were minimal. The number of tools available allowed students to tackle this question in varied and creative ways. We observed students making highly detailed and colourful diagrams and using PowerPoint slides to create quasi-functional mock-ups of title screens for their proposed games.

Hurdles encountered: While it was nail-biting to observe and orchestrate, there were no significant challenges encountered during this exam.

The final verdict

The potential to enhance digital examinations with more authentic assessment is clear. This is a valuable practice which can increase student satisfaction (Lincoln Then James & Riza Casidy, 2018) and encourage the demonstration of skill sets that are much desired in relevant fields and more broadly by prospective employers. However, it is not without its limitations. Consideration must be given to issues like scalability, whether students and supporting teams are adequately prepared given how radically different these exam questions can be, and whether students are being assessed comparably with those on equivalent degrees at other institutions. From our experience so far of implementing this approach in digital exams:

- Rigorous testing is needed to ensure that the examinations will function as intended.

- Some form of student involvement is essential, ideally introducing them to a similar format in class tests and including them in later-stage testing. This doubles as exam preparation for the student and further development of the questions for the academic, which must always be an iterative process.

- The extra setup required for these exams (i.e. producing duplicate resources and assigning these resources to individual students) is very labour intensive and is currently best suited to smaller cohorts. For this reason it may also be sensible to include only one or two questions of this nature in an exam paper.

- Always produce extra copies of resources, a few more than the official number of exam candidates. This allows for any unexpected additional candidates joining the examination and also serves as a restoration/backup point if a student accidentally deletes an essential part of the initial resource which cannot be recovered using typical methods. Additionally, ensure that the key members of the supporting teams all have access to these resources where appropriate.

- The additional resources that students are permitted to access should always be highlighted on the cover sheet of the examination so that there is no confusion for supporting/invigilation teams.

- If the additional resources allow open access to the internet or files in offline storage in some capacity, careful consideration must be given to the security implications for other elements of the exam: will this potentially give the students the tools needed to cheat?

References

Anna Mckie (2018) Time to get real, Times Higher Education, 23/05/19, pp. 36-41

Lincoln Then James & Riza Casidy (2018) Authentic assessment in business education: its effects on student satisfaction and promoting behaviour, Studies in Higher Education, 43:3, 401-415, DOI: 10.1080/03075079.2016.1165659